- 1. Why does application acceleration matter now?

- 2. How does application acceleration work?

- 3. Where does application acceleration live in modern networks?

- 4. How is application acceleration different from WAN optimization?

- 5. What are the technical benefits of application acceleration?

- 6. What do real-world adoption trends show?

- 7. Learn more about SD-WAN in action: Read Zero Trust Branch for SD-WAN For Dummies to see how SD-WAN architecture extends to branch security and SASE.

What Is Application Acceleration? [+ How It Works & Examples]

Application acceleration is the process of improving how efficiently applications deliver data across a network.

It uses transport, protocol, content, and path optimization techniques to reduce latency, packet loss, and retransmissions. These mechanisms allow applications to maintain consistent responsiveness and throughput across cloud, mobile, and distributed environments.

Why does application acceleration matter now?

Applications no longer live in one place.

They're distributed across clouds, SaaS platforms, and data centers. Users connect from anywhere. And this creates long, unpredictable paths between endpoints. Every hop adds latency. Every wireless link adds jitter. And every security control adds processing delay.

In the past, these effects were hidden inside private networks. Today, that's no longer true.

Hybrid and remote work have shifted most user traffic onto the public internet, where first-mile instability and packet loss are common.

Encrypted connections make those delays more visible, because the network can't see or optimize the payload. Which means application performance now depends on how efficiently the transport and delivery layers handle distance, congestion, and encryption overhead.

Cloud dependency has also changed what traffic looks like.

Modern applications rely on constant API calls between services. Each transaction is small, dynamic, and personalized.

Traditional WAN optimization was built for bulk data transfer and repetitive patterns. It can't predict or cache unique, real-time content. Nor can it accelerate traffic that's fully encrypted end to end.

At the same time, security overlays such as TLS inspection, CASB (cloud access security broker), and DLP (data loss prevention) introduce additional latency. They process each session independently, increasing round-trip time and CPU load. Without compensating transport and protocol-level optimization, that overhead slows user response.

In short: application acceleration matters now because the network itself has changed. Work is mobile. Applications are dynamic. Traffic is encrypted. And the distance between users and data keeps growing.

These pressures reshaped how acceleration itself works.

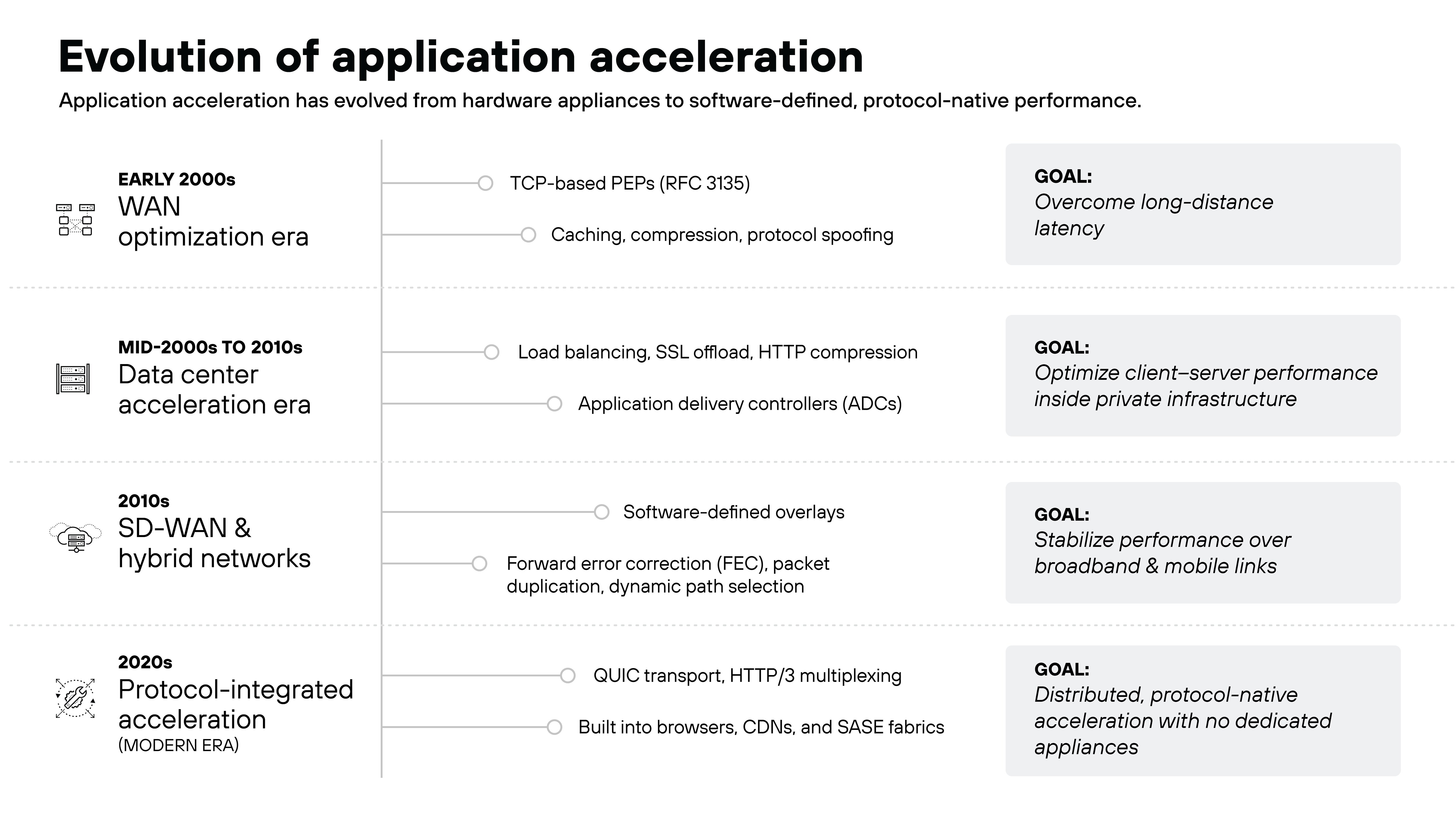

How has application acceleration evolved?

Application acceleration has progressed through several distinct phases as enterprise networking and application delivery have changed.

- In the early years, most applications were centralized, and WAN optimization appliances handled performance improvements between sites.

  These devices used TCP-based performance-enhancing proxies to accelerate traffic through caching, compression, and protocol spoofing, compensating for bandwidth and distance constraints.

- As organizations began hosting more web-facing services, acceleration functions shifted toward the data center.

  Application delivery controllers emerged to manage load balancing, SSL offload, and HTTP compression. They improved performance and reliability but remained bound to fixed infrastructure.

- The transition to cloud and hybrid networks marked the next stage.

  SD-WAN (software-defined wide area network) overlays added forward error correction, packet duplication, and dynamic path selection to maintain stable throughput over variable internet and mobile links.

  Many of these techniques, such as compression and FEC, persisted from earlier generations, though their implementation moved from hardware appliances into software-defined platforms. These capabilities brought application performance management closer to the network edge.

- Today, acceleration has become part of the protocols themselves.

  QUIC and HTTP/3 integrate congestion control, loss recovery, and multiplexing directly into the transport layer, providing efficiency without hardware appliances.

In essence, application acceleration has evolved from appliance-based optimization to a distributed, software-defined capability embedded across the network and protocol stack.

How does application acceleration work?

Application acceleration works by reducing the delays and inefficiencies that slow down how applications respond over a network.

It operates across several technical layers, each addressing a different source of latency or packet loss. Together, these layers help data move more predictably between users and the applications they access.

Each layer contributes its own kind of optimization, from how packets move to how content is delivered:

- Transport optimization focuses on the connection itself.

  Traditional TCP relies on sequential packet delivery and conservative congestion control. QUIC replaces this behavior with a faster handshake, multiplexed streams, and smarter loss recovery.

  It also supports 0-RTT connections that allow data to flow immediately after a session resumes. In practice, this shortens round-trip times and keeps sessions stable even when bandwidth fluctuates.

  Kernel and network-interface limits still define the upper boundary of performance, but transport optimization minimizes how often those limits are reached.

- Protocol optimization improves how application traffic is exchanged once the connection is established.

  HTTP/3, built on QUIC, eliminates the TCP-level head-of-line blocking that slowed HTTP/2 and reuses encrypted sessions instead of re-negotiating TLS with every request. That means fewer round trips between client and server and more efficient use of each connection.

- Content optimization reduces the size of what must travel across the network.

  Compression, caching, and deduplication lower the number of bytes in transit and shorten delivery time for static resources. However, these techniques provide little benefit for dynamic or personalized data, where every response is unique and often encrypted.

- Path optimization manages how data travels between locations.

  Techniques like forward error correction, packet duplication, and dynamic path selection help recover lost packets and balance load across multiple links. Some architectures add jitter buffering to smooth latency across Wi-Fi and mobile connections.

Together, these layers shorten round-trip times and keep throughput consistent across variable networks.
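To make the round-trip savings concrete, here is a rough back-of-the-envelope sketch. The setup round-trip counts are the commonly cited ideal-case values for each stack (the TLS versions are an assumption, since the article only names TCP and QUIC), and the 80 ms round-trip time is hypothetical. The model ignores server processing time, packet loss, and congestion.

```python
# Ideal-case round trips spent on connection setup before the first
# request can be sent. Illustrative values, not measurements.
SETUP_ROUND_TRIPS = {
    "TCP + TLS 1.2": 3,          # 1 for TCP, 2 for the TLS 1.2 handshake
    "TCP + TLS 1.3": 2,          # 1 for TCP, 1 for the TLS 1.3 handshake
    "QUIC (new connection)": 1,  # transport and crypto handshakes combined
    "QUIC 0-RTT (resumed)": 0,   # request data rides in the first flight
}


def time_to_first_response_ms(setup_round_trips: int, rtt_ms: float) -> float:
    """Setup round trips plus one round trip for the request and response."""
    return (setup_round_trips + 1) * rtt_ms


if __name__ == "__main__":
    rtt_ms = 80.0  # hypothetical round-trip time between client and server
    for stack, trips in SETUP_ROUND_TRIPS.items():
        print(f"{stack}: {time_to_first_response_ms(trips, rtt_ms):.0f} ms")
```

Under these assumptions, the gap between stacks grows linearly with distance, which is why handshake savings matter most on long or mobile paths.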

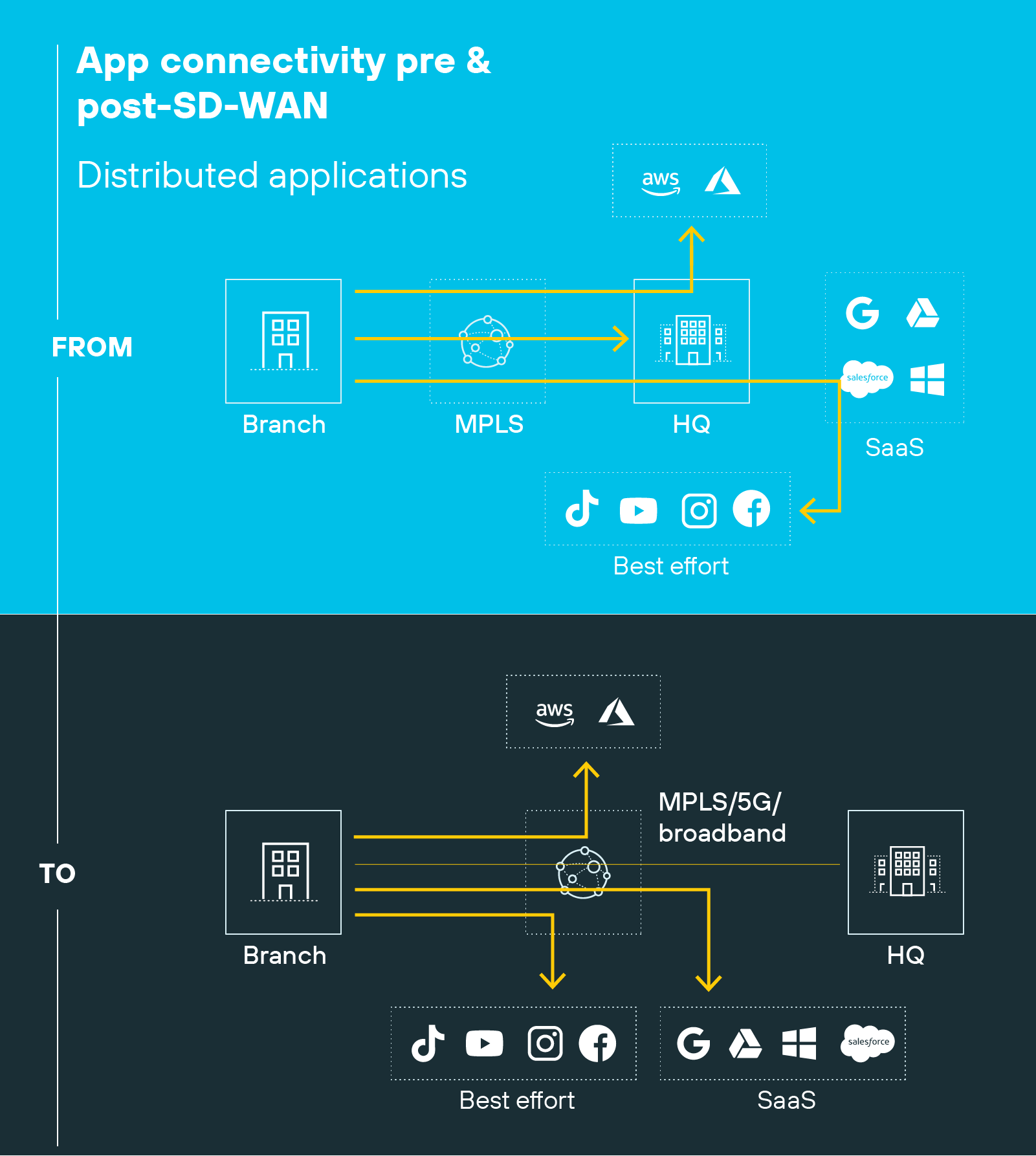

Where does application acceleration live in modern networks?

Application acceleration operates across multiple layers of the network rather than in a single device or location. Each layer contributes to improving performance by addressing latency, loss, or congestion where it occurs.

At the edge, client and endpoint agents manage first-mile conditions.

They monitor connection quality and adapt to variable Wi-Fi or mobile performance by smoothing jitter and optimizing initial transport behavior. This helps maintain consistent sessions as users move between networks.

Within edge and content delivery nodes, acceleration functions such as caching and intelligent routing deliver static and frequently accessed content from nearby servers.

These edge locations minimize round trips to the origin and reduce the impact of distance on response time.

Across the middle mile, software-defined WAN overlays and global backbone fabrics handle path optimization.

They apply forward error correction, packet duplication, and dynamic path selection to maintain throughput when internet links fluctuate. These capabilities are crucial for consistent SaaS and cloud access.
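Forward error correction can take many forms, and the article does not name a specific scheme. As a minimal sketch, assume the simplest one: a single XOR parity packet per group of equal-length packets, which lets the receiver rebuild any one lost packet without a retransmission. The function names here are hypothetical.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length packets."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: list[bytes]) -> bytes:
    """XOR every packet in the group into one parity packet."""
    return reduce(xor_bytes, packets)


def recover_missing(
    received: dict[int, bytes], parity: bytes, group_size: int
) -> dict[int, bytes]:
    """Rebuild a single missing packet from the survivors and the parity.

    With one parity packet, only one loss per group can be repaired.
    If zero or several packets are missing, the input is returned unchanged.
    """
    missing = [i for i in range(group_size) if i not in received]
    if len(missing) != 1:
        return dict(received)
    # XOR of the parity with all surviving packets yields the lost packet.
    rebuilt = reduce(xor_bytes, received.values(), parity)
    return {**received, missing[0]: rebuilt}


if __name__ == "__main__":
    group = [b"AAAA", b"BBBB", b"CCCC"]
    parity = make_parity(group)
    survivors = {0: group[0], 2: group[2]}  # packet 1 was lost in transit
    print(recover_missing(survivors, parity, group_size=3)[1])
```

The trade-off is bandwidth: one extra packet per group is sent whether or not anything is lost, which is why platforms typically enable FEC only when loss is observed.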

In cloud and SASE gateways, acceleration is integrated with security inspection and policy enforcement.

Traffic is optimized and secured in the same pass through the data plane, avoiding extra latency from multiple processing stages.

All of this is managed through centralized visibility and analytics.

Digital experience monitoring tools provide real-time data on latency, jitter, and packet loss, allowing policies to adapt automatically. Centralized analytics not only measure performance but also inform policy decisions, keeping optimization and security aligned.

This distributed model—spanning client to cloud—defines how performance and security now converge. Application acceleration lives everywhere that data travels, coordinated through software and informed by telemetry.

Further reading:

- What Is SD-WAN Architecture? Components, Types, & Impacts

- What Is a Cloud Secure Web Gateway?

- What Is SASE (Secure Access Service Edge)? | A Starter Guide

How is application acceleration different from WAN optimization?

Application acceleration and WAN optimization share a goal: better performance. But they achieve it in very different ways.

WAN optimization improves how the network itself operates. Application acceleration improves how efficiently applications perform for the user.

WAN optimization was built for branch-to-data-center connectivity when traffic was predictable and mostly unencrypted. It focused on throughput efficiency through techniques like compression, deduplication, and TCP optimization. The goal was to maximize bandwidth and minimize retransmissions over static links.
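The compression and deduplication techniques mentioned above can be sketched roughly as follows. This is an illustration under stated assumptions, not how any particular appliance works: fixed 1 KB chunks, SHA-256 digests as chunk references, and `zlib` for compression. The class names are hypothetical.

```python
import hashlib
import zlib

CHUNK_SIZE = 1024  # assumed fixed chunk size; real products often vary it


class DedupSender:
    """Sends a short reference instead of data for chunks already sent."""

    def __init__(self) -> None:
        self.seen: set[bytes] = set()

    def encode(self, payload: bytes) -> list[tuple[str, bytes]]:
        messages = []
        for start in range(0, len(payload), CHUNK_SIZE):
            chunk = payload[start:start + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).digest()
            if digest in self.seen:
                messages.append(("ref", digest))  # 32-byte reference only
            else:
                self.seen.add(digest)
                messages.append(("data", zlib.compress(chunk)))
        return messages


class DedupReceiver:
    """Rebuilds the payload, caching chunks so references can be resolved."""

    def __init__(self) -> None:
        self.store: dict[bytes, bytes] = {}

    def decode(self, messages: list[tuple[str, bytes]]) -> bytes:
        parts = []
        for kind, body in messages:
            if kind == "data":
                chunk = zlib.decompress(body)
                self.store[hashlib.sha256(chunk).digest()] = chunk
            else:
                chunk = self.store[body]
            parts.append(chunk)
        return b"".join(parts)


def wire_size(messages: list[tuple[str, bytes]]) -> int:
    """Bytes of message bodies that would cross the link."""
    return sum(len(body) for _, body in messages)
```

The sketch only helps when the same bytes cross the link more than once and the sender can read them. That is the limitation noted for encrypted, personalized traffic: each response looks unique, so there is nothing to match.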

Application acceleration takes a broader, distributed view. It improves application responsiveness, not just transport efficiency. It combines transport, protocol, and path optimization to reduce latency and packet loss for cloud, SaaS, and mobile workloads. And it's aware of application behavior, not just network performance.

The table below summarizes the main distinctions between the two approaches.

Comparison: Application acceleration vs. WAN optimization

| Category | Application acceleration | WAN optimization |
|---|---|---|
| Primary focus | Application responsiveness and consistency | Network throughput efficiency |
| Scope | Cloud, SaaS, and distributed users | Network-wide, fixed-site links |
| Techniques used | QUIC/HTTP/3, dynamic path selection, FEC, protocol and content optimization | Compression, deduplication, TCP optimization |
| Deployment model | Software-defined, protocol-integrated, and distributed across SD-WAN, SASE, and CDN architectures | Hardware or virtual appliances in data centers |
| End result | Consistent, resilient application experience across any network | Faster data transfer across static WANs |

In short: WAN optimization makes the network faster. Application acceleration makes the application perform better on any network. It's application-aware, protocol-native, and location-agnostic—no longer confined to appliances at the data center edge.

How is performance measured and tuned today?

Performance in modern networks is measured through continuous observation, not periodic testing.

Digital experience monitoring systems collect real-time telemetry across users, devices, and links. They track latency, jitter, and retransmissions to identify where delays originate. As a result, visibility now extends from the user's browser to the network edge and the cloud service.
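As a rough illustration of how those three metrics might be derived from raw samples, consider the sketch below. The definitions are assumptions chosen for simplicity: jitter is taken as the mean absolute difference between consecutive round-trip samples, and the retransmission rate as retransmitted packets divided by packets sent. Monitoring products may define them differently.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LinkStats:
    avg_latency_ms: float
    jitter_ms: float
    retransmission_rate: float


def summarize(
    rtt_samples_ms: list[float], packets_sent: int, packets_retransmitted: int
) -> LinkStats:
    """Condense raw probe samples and counters into three headline metrics."""
    if not rtt_samples_ms:
        raise ValueError("at least one RTT sample is required")
    # Jitter: average change between one sample and the next.
    deltas = [abs(b - a) for a, b in zip(rtt_samples_ms, rtt_samples_ms[1:])]
    jitter = mean(deltas) if deltas else 0.0
    rate = packets_retransmitted / packets_sent if packets_sent else 0.0
    return LinkStats(mean(rtt_samples_ms), jitter, rate)


if __name__ == "__main__":
    # Hypothetical samples from one user session.
    print(summarize([40.0, 50.0, 45.0, 65.0], packets_sent=1000,
                    packets_retransmitted=25))
```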

The same telemetry powers adaptive control loops.

SD-WAN and SASE platforms analyze these metrics in real time and automatically adjust performance parameters. They can change path selection, apply forward error correction, or alter quality-of-service priorities based on live conditions. This keeps sessions stable even when bandwidth or signal quality fluctuates.
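One step of such a control loop might look like the sketch below. Everything in it is hypothetical: the weights, the 1% loss threshold for enabling FEC, and the path names are illustrative values, not defaults from any SD-WAN or SASE product.

```python
from dataclasses import dataclass


@dataclass
class PathMetrics:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float


def score(path: PathMetrics, w_latency: float = 1.0,
          w_jitter: float = 2.0, w_loss: float = 50.0) -> float:
    """Weighted cost of a path. Lower is better. Weights are assumptions."""
    return (w_latency * path.latency_ms
            + w_jitter * path.jitter_ms
            + w_loss * path.loss_pct)


def decide(paths: list[PathMetrics],
           fec_loss_threshold_pct: float = 1.0) -> dict:
    """Pick the lowest-cost path and enable FEC only if that path is lossy."""
    best = min(paths, key=score)
    return {
        "path": best.name,
        "fec_enabled": best.loss_pct >= fec_loss_threshold_pct,
    }


if __name__ == "__main__":
    live = [
        PathMetrics("broadband", latency_ms=30, jitter_ms=5, loss_pct=2.0),
        PathMetrics("lte", latency_ms=60, jitter_ms=15, loss_pct=0.5),
        PathMetrics("mpls", latency_ms=45, jitter_ms=2, loss_pct=0.0),
    ]
    print(decide(live))
```

Running `decide` on every telemetry refresh is what turns static policy into a feedback loop: when a link degrades, its score rises and traffic moves without manual intervention.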

Measurement has also moved closer to the application layer.

Modern browsers support application-layer hints such as the HTTP 103 Early Hints status code and the Fetch Priority API. Early Hints lets a server point the browser at critical resources before the full response is ready, and Fetch Priority lets a page mark which resources matter most. Both improve perceived load time.
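To show what these hints look like on the wire, the sketch below builds a raw HTTP/1.1 `103 Early Hints` interim response followed by the final response. The helper names and resource paths are hypothetical. In practice a web server or CDN emits these, and HTTP/2 and HTTP/3 carry the same information in binary frames rather than text lines.

```python
def early_hints_response(preloads: list[tuple[str, str]]) -> bytes:
    """Build a 103 interim response with one preload Link header per resource.

    Each tuple is (url, destination type), for example ("/app.css", "style").
    """
    lines = ["HTTP/1.1 103 Early Hints"]
    for url, as_type in preloads:
        lines.append(f"Link: <{url}>; rel=preload; as={as_type}")
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def final_response(body: bytes) -> bytes:
    """Build the final 200 response that follows the interim one."""
    head = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: text/html\r\n"
        f"Content-Length: {len(body)}\r\n\r\n"
    )
    return head.encode("ascii") + body


if __name__ == "__main__":
    # The page itself can mark its most important image via Fetch Priority.
    html = b'<img src="/hero.jpg" fetchpriority="high">'
    stream = early_hints_response([("/app.css", "style")]) + final_response(html)
    print(stream.decode("ascii"))
```

The browser can start fetching `/app.css` as soon as the interim response arrives, while the server is still generating the page.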

All of these feedback systems are what allow application acceleration to remain adaptive and context-aware rather than fixed to static rules.

In short, application acceleration is now informed by data from every layer: client, network, and cloud. Decisions that once required manual tuning happen automatically through analytics and closed feedback loops.

Performance optimization is now continuous and data-driven, not a matter of static configuration.

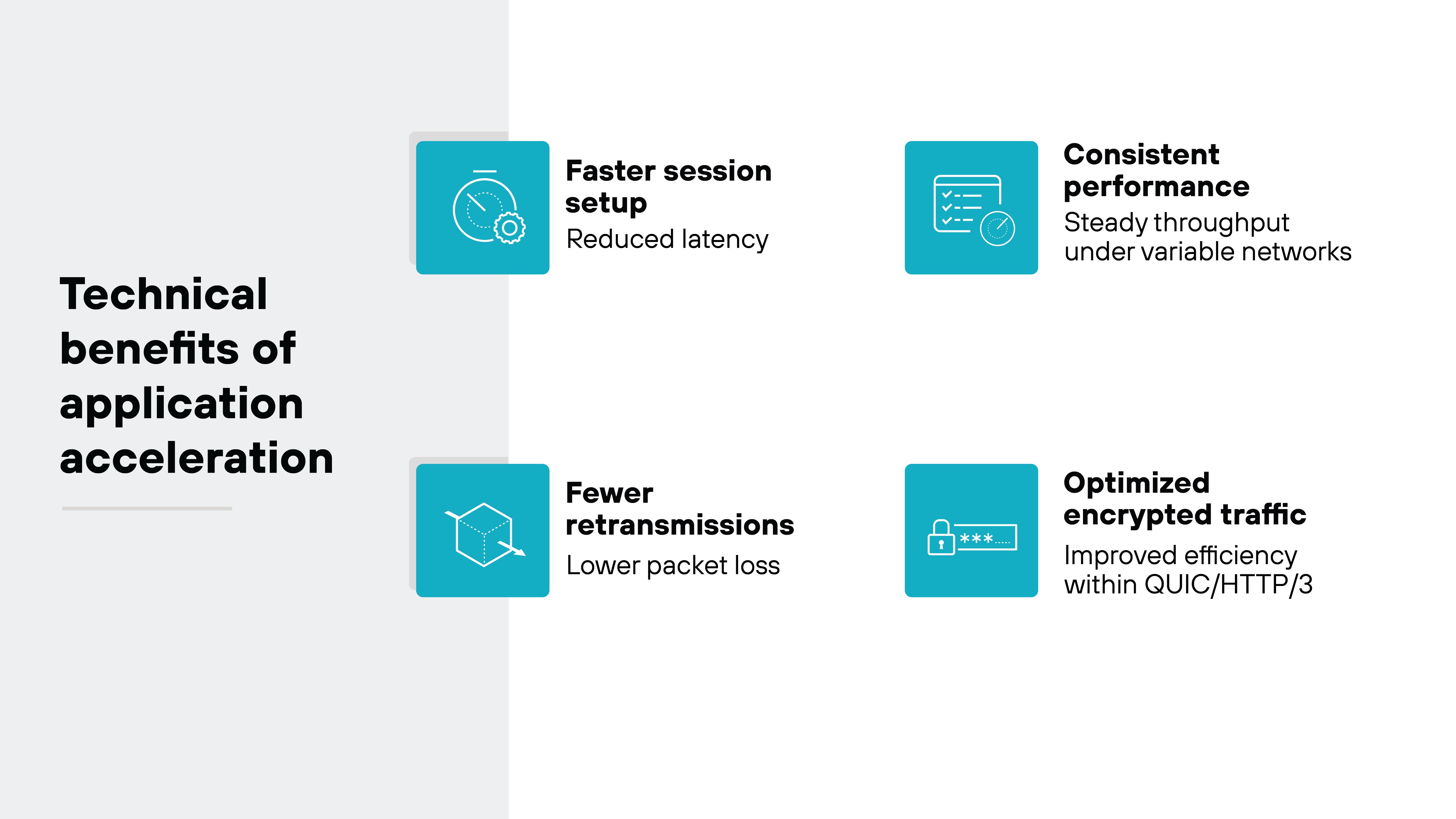

What are the technical benefits of application acceleration?

Application acceleration produces measurable network and protocol improvements rather than subjective user impressions. It enhances how quickly connections start, how reliably data moves, and how consistently applications perform across unpredictable networks.

- One key advantage is faster session setup.

  QUIC's 0-RTT handshake allows a connection to resume immediately without repeating the full negotiation cycle. That reduces latency on every reconnect and shortens total page or session load time.

- It also minimizes the impact of retransmissions and packet loss.

  Forward error correction and adaptive recovery mechanisms detect and replace missing packets before users notice a slowdown. Which means data flows continuously, even over lossy or congested links.

- Consistency across hybrid and mobile connections is another major gain.

  Acceleration techniques smooth jitter, manage variable signal quality, and optimize bandwidth use. The result is steady SaaS and remote-access performance, even when network conditions fluctuate.

- Finally, modern acceleration improves throughput for encrypted and dynamic content.

  Because optimization now operates within QUIC and HTTP/3, it enhances flow control and multiplexing without breaking encryption. This keeps security intact while increasing transfer efficiency.

Each improvement is quantifiable through metrics such as round-trip time, retransmission rate, and sustained throughput. These are observable network- and protocol-level gains, not abstract productivity claims.

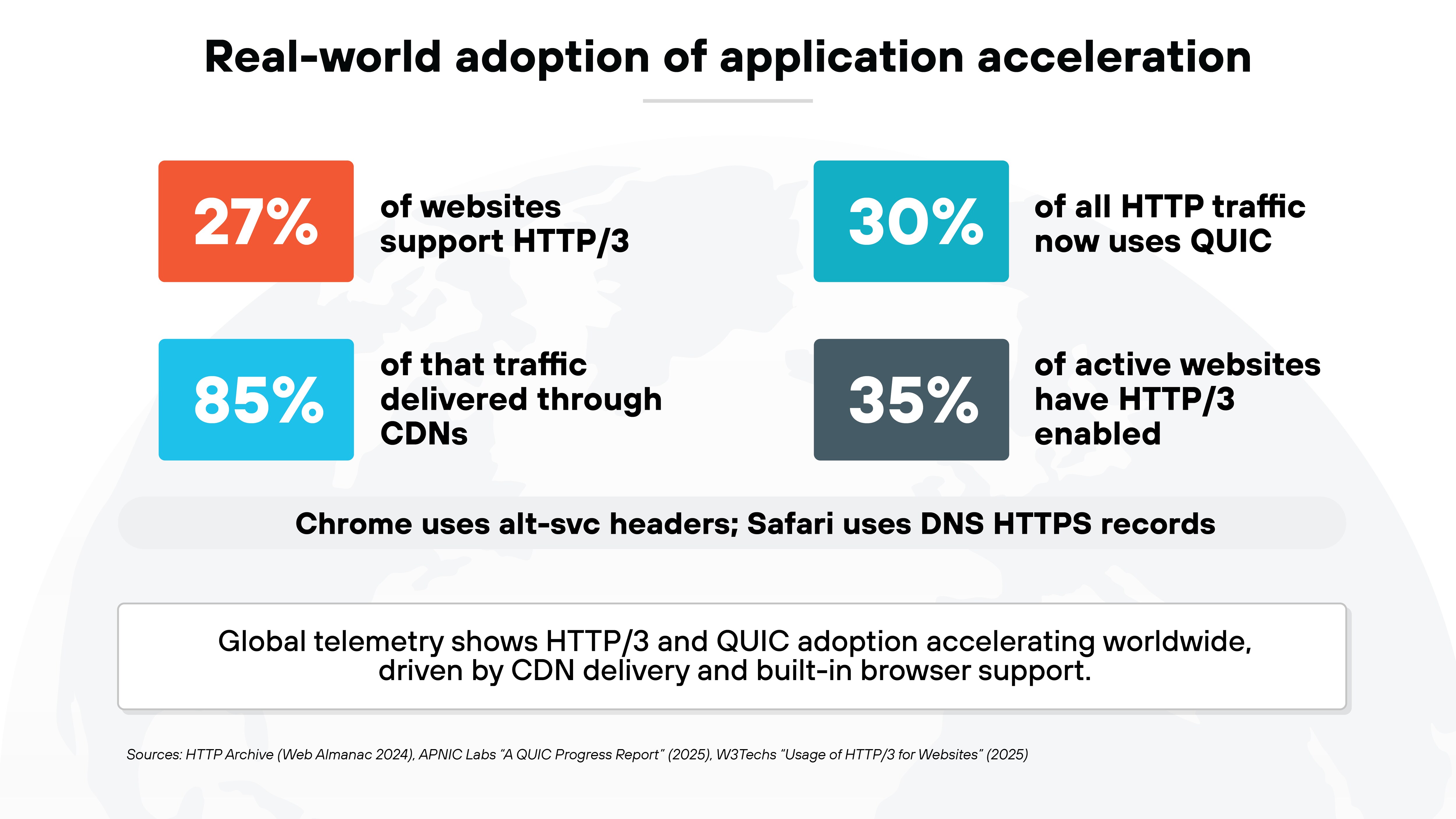

What do real-world adoption trends show?

Application acceleration is no longer theoretical. It's visible in how quickly modern web and cloud services have embraced the protocols that enable it.

In fact, adoption data from multiple global telemetry sources shows steady, measurable growth over the past two years:

The majority of HTTP/3 adoption is being driven by CDN infrastructure, which brings acceleration capabilities to the edge.

According to the 2024 Web Almanac by HTTP Archive, roughly 27% of websites now support HTTP/3 through alt-svc discovery. Nearly 85% of that traffic is delivered through content delivery networks.

APNIC Labs' QUIC Progress Report from June 2025 shows a similar trend from a network perspective. Around 30% of all HTTP traffic now uses QUIC as its transport protocol.

Meanwhile, W3Techs' "Usage statistics of HTTP/3 for websites" report indicates that approximately 35% of active websites have HTTP/3 enabled.

Adoption also differs by browser and connection trigger.

The APNIC Labs report also indicates that Chrome primarily uses alt-svc headers to discover QUIC endpoints, while Safari relies on DNS HTTPS records. This difference affects when and how clients switch to HTTP/3, but both methods are expanding quickly as providers enable the two mechanisms side by side for broader coverage.
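For a sense of what alt-svc discovery involves on the client side, here is a simplified parser for an `Alt-Svc` response header value. It is a sketch rather than a full implementation of the header grammar: it splits on commas and semicolons and so assumes no quoted value contains those characters. The function names and the example header are hypothetical.

```python
from typing import Optional


def parse_alt_svc(value: str) -> list[dict]:
    """Parse an Alt-Svc header value into a list of alternative services.

    Example input: 'h3=":443"; ma=86400'
    """
    if value.strip() == "clear":
        return []  # "clear" tells the client to forget cached alternatives
    entries = []
    for item in value.split(","):
        parts = [part.strip() for part in item.split(";")]
        protocol, _, authority = parts[0].partition("=")
        max_age: Optional[int] = None
        for param in parts[1:]:
            key, _, val = param.partition("=")
            if key.strip() == "ma":
                max_age = int(val)
        entries.append({
            "protocol": protocol.strip(),
            "authority": authority.strip().strip('"'),
            "max_age": max_age,
        })
    return entries


def advertises_http3(value: str) -> bool:
    """True if any advertised alternative uses the h3 protocol identifier."""
    return any(entry["protocol"] == "h3" for entry in parse_alt_svc(value))


if __name__ == "__main__":
    header = 'h3=":443"; ma=86400, h2="alt.example.com:8443"'
    print(parse_alt_svc(header))
    print(advertises_http3(header))
```

Because the header arrives on a response, a client that relies on it must complete at least one request over an earlier HTTP version before it can switch. A DNS HTTPS record can advertise HTTP/3 support before the first connection is made.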

The evidence points to one conclusion: application acceleration has become mainstream.

Its growth is being propelled by CDNs, integrated SASE platforms, and cloud-native delivery models.

These underlying technologies are now embedding acceleration by default, making optimized performance a baseline expectation rather than an optional enhancement. The numbers represent not just protocol adoption, but architectural change—the shift toward performance optimization built directly into the delivery layer.